Data

Filter customer data by customer type (VIP or Regular)

from pyspark.sql.functions import *

customer=spark.sql("Select * FROM workspace.customerdata.customer")

display(customer)

customer.printSchema()

df1=customer.filter(customer["customer_type"]=="VIP")

df0=customer.filter(col("customer_type")=="Regular")

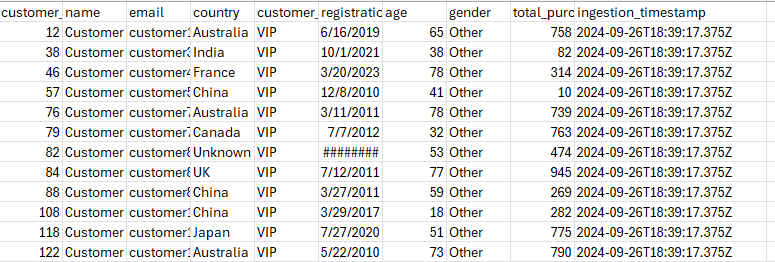

display(df1)

# filter by customer_type and country

df1=customer.filter((customer.customer_type=="VIP") & (customer.country=='USA'))

# where condition

df2=customer.where((customer.customer_type=="VIP") & (customer.country=='USA'))

# or operator

df3=customer.where((customer.customer_type=="VIP") | (customer.country=='USA'))

Add a new column with the withColumn function

from pyspark.sql.functions import *

customer=spark.sql("Select * FROM workspace.customerdata.customer")

customer =customer.withColumn("Salary", col("age")* 1000)

customer.printSchema()

display(customer)

# withColumn function with a when/otherwise condition

customer=customer.withColumn("Seniority", when(customer.age>50, "Senior").otherwise("Junior"))